Juan Huerta

"I am Juan Huerta, a specialist dedicated to optimizing nutrient delivery in vertical farms through underground robot vision feedback systems. My work focuses on developing sophisticated monitoring and control frameworks that utilize advanced robotics and computer vision to enhance the efficiency of vertical farming operations. Through innovative approaches to agricultural technology and automation, I work to improve crop yields and resource utilization.

My expertise lies in developing comprehensive systems that combine underground robotic platforms, advanced vision sensors, and precise nutrient delivery mechanisms to create optimal growing conditions. Through the integration of real-time visual feedback, environmental monitoring, and automated control systems, I work to maximize the effectiveness of vertical farming operations while minimizing resource waste.

Through comprehensive research and practical implementation, I have developed novel techniques for:

Creating underground robot navigation and mapping systems

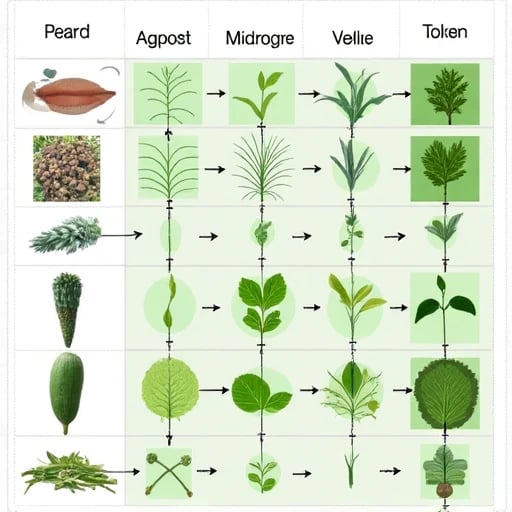

Developing real-time root zone visualization platforms

Implementing precise nutrient delivery control mechanisms

Designing automated environmental monitoring systems

Establishing protocols for growth optimization based on visual feedback

My work encompasses several critical areas:

Agricultural robotics and automation

Computer vision and image processing

Nutrient delivery systems

Environmental monitoring and control

Vertical farming technology

Resource optimization and sustainability

I collaborate with agricultural engineers, robotics specialists, computer vision experts, and plant scientists to develop comprehensive optimization solutions. My research has contributed to improved crop yields and resource efficiency in vertical farms, and has informed the development of more sustainable agricultural practices. I have successfully implemented vision-based control systems in various vertical farming facilities worldwide.

The challenge of optimizing nutrient delivery in vertical farms is crucial for improving agricultural productivity and sustainability. My ultimate goal is to develop robust, efficient control systems that enable precise management of growing conditions through visual feedback. I am committed to advancing the field through both technological innovation and agricultural expertise, particularly focusing on solutions that can help address global food security challenges.

Through my work, I aim to create a bridge between traditional farming methods and modern technological approaches, ensuring that we can maximize crop productivity while minimizing resource waste. My research has led to the development of new standards for vertical farming operations and has contributed to the establishment of best practices in automated agriculture. I am particularly focused on developing systems that can adapt to different crop types and growing conditions while maintaining optimal nutrient delivery patterns."

Recommended past research includes:

“Deep Learning-Based Phenotypic Analysis of Greenhouse Crops” (2023): Developed a lightweight CNN model for plant images, validating visual data’s efficacy in growth prediction.

“Soil Nutrient Monitoring System with Multi-Robot Collaboration” (2022): Designed communication protocols for underground robot swarms, offering hardware integration insights for this study.

“Limitations of GPT-3 in Agricultural Q&A” (2024): Highlighted gaps in public models for domain-specific tasks (e.g., agronomic parameter interpretation), justifying this project’s fine-tuning needs.

These works demonstrate my methodological expertise and problem-awareness in agricultural AI.

This research requires GPT-4 fine-tuning for three reasons:

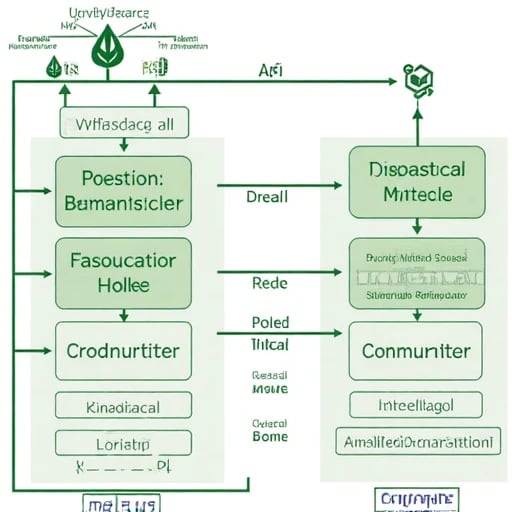

Complex Scene Parsing: Underground root images contain noise, low light, and overlapping structures, necessitating GPT-4’s enhanced visual understanding (e.g., spatial attention mechanisms) for feature extraction.

Multimodal Alignment: Fusion of image data and sensor parameters demands GPT-4’s superior cross-modal alignment capabilities to reduce semantic gaps compared to GPT-3.5.

Real-Time Decision Requirements: Vertical farms require millisecond-level responses; GPT-4’s optimized inference speed meets dynamic control conditions.

Publicly available GPT-3.5 lacks adaptability to agricultural terminology and root datasets, and its fine-tuning flexibility is limited. GPT-4 enables customized prompt engineering and domain knowledge embedding, ensuring outputs align with agronomic constraints.
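As a rough illustration of how image-derived observations and sensor parameters might be combined into a single fine-tuning example, the sketch below builds one record in the chat-style JSON Lines layout commonly used for fine-tuning chat models. Everything specific in it is an assumption made for illustration and not part of my deployed systems: the `build_record` helper, the sensor field names, the text summary standing in for a raw root image, and the `EC_RANGE_MS_CM` limits, which would in practice depend on the crop and growing system.

```python
import json

# Hypothetical agronomic limits for nutrient solution EC (mS/cm).
# Real limits depend on crop type and growing system.
EC_RANGE_MS_CM = (1.2, 2.4)


def clamp(value, low, high):
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def build_record(image_summary, sensors, target_ec):
    """Build one chat-style training example as a dict.

    image_summary: text description standing in for a root-zone image.
    sensors: dict of sensor readings (field names are illustrative).
    target_ec: recommended EC label; clamped to the assumed limits so
               the training target never violates the constraint.
    """
    safe_ec = clamp(target_ec, *EC_RANGE_MS_CM)
    user_text = (
        f"Root image summary: {image_summary}\n"
        f"Sensor readings: {json.dumps(sensors, sort_keys=True)}"
    )
    return {
        "messages": [
            {
                "role": "system",
                "content": "You recommend nutrient solution EC for a vertical farm.",
            },
            {"role": "user", "content": user_text},
            {
                "role": "assistant",
                "content": json.dumps({"target_ec_ms_cm": safe_ec}),
            },
        ]
    }


record = build_record(
    "dense white root tips, no browning",
    {"ph": 6.1, "ec_ms_cm": 1.8, "temp_c": 21.5},
    target_ec=2.9,  # deliberately above the assumed upper limit
)
line = json.dumps(record)  # one line of a JSONL training file
print(line)
```

Clamping the label before it is written means the agronomic constraint is enforced in the training data itself, not left for the model to infer. A production pipeline would likely also validate the model's outputs against the same limits at inference time.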